madness

Things that make you go “aigh!”.

Elon Musk is a dick

When a platform or a company (or a country, for that matter) becomes more focused on its celebrity leader than on the members or the products or the community, it’s time to leave.

Twitter is now Elon Musk’s private playground, so I no longer want to be a part of it.

I deleted my Twitter account this week. So maybe this personal blog is again the place for me to post my random thoughts.

Native IPv6 on TWC at home

About a year ago, we switched from AT&T DSL to Time Warner Cable. I bought my own cable modem, a Motorola Surfboard SB6141 (hardware version 8). Time Warner’s web site said that they support the SB6141, but it turns out they only supported some earlier hardware versions of the SB6141. Basically, the modem worked for IPv4, but I found that it did not support IPv6, even though I knew Time Warner’s network supported it. To get the modem to work with IPv6, I would have to wait for a firmware upgrade, which is something that Time Warner would have to make available, and which my modem would then install automatically the next time it rebooted.

So I set up a cron job to reboot the modem every week. It would curl into the modem’s web interface and press the “reboot” button on the web form. Then it would wait for the modem to come back up, and it would look at the firmware version number. If the version number had changed, I would get an email. Furthermore, it would run rdisc6 eth0 to see if any IPv6 routes were being advertised, and if they were, I would get an email.
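Here is a rough sketch of the two checks that job performed. It is not the original script: the state file path, the function names, and the `mail` invocation are assumptions for illustration, and the part that reboots the modem and scrapes its version string is left out because it depends entirely on the modem's web pages.

```bash
#!/bin/bash
# Sketch of the weekly checks described above (hypothetical names and paths).

state_file="/var/tmp/modem-firmware-version"   # assumed location for the last-seen version

# Succeeds when the firmware string differs from the one saved on the previous run.
# Always records the current string for next time.
firmware_changed() {
    local current="$1" previous=""
    if [[ -f $state_file ]]; then
        previous="$(cat "$state_file")"
    fi
    printf '%s\n' "$current" > "$state_file"
    [[ -n $previous && $current != "$previous" ]]
}

# Reads rdisc6 output on stdin; succeeds if any router answered.
# This looks for the same "from <address>" lines that the helper script
# later in this post greps for.
saw_router_advert() {
    grep -q '^ *from'
}

# Example wiring (not run here; $version would come from the modem's status page):
#   firmware_changed "$version" && echo "new firmware: $version" | mail -s "modem firmware" me@example.com
#   rdisc6 eth0 | saw_router_advert && echo "RA seen on eth0" | mail -s "native IPv6" me@example.com
```

The very first run never reports a change, since there is nothing to compare against yet.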

Nine months later, I got the email! They had upgraded my modem, and I had native IPv6 support! So I needed to log into my router (a Zotac ZBox C-series mini-sized computer running Ubuntu) and turn off the Hurricane Electric tunnel and configure it to use the native IPv6. This turned out to be easier said than done. I spent the entire day and part of the next doing just that.

What needed to be done

On an IPv4 network, your ISP assigns a single IP address to your router, and you choose an unrouted private subnet to use on your home network (10.x.x.x, 172.16-31.x.x, or 192.168.x.x).

For IPv6, all of your addresses are routable, which means they come from your ISP. But they do assign two subnets to you: one (IA, or Identity Association) is for the router itself, and the other (PD, or Prefix Delegation) is for your home network. Typically, the IA will be a /64 subnet and the PD will be something larger, like a /60 or /56. You can split up that pool of IPs into smaller /64 subnets for each network segment in your home (maybe one for eth1 and another one for wlan0).
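To make the slicing concrete, here is a minimal sketch of the arithmetic, assuming a /56 delegation (which leaves 8 bits for numbering your own /64 subnets). The function name `pd_subnet` and the 2001:db8:: documentation prefix are made up for illustration; your ISP's prefix will differ.

```bash
#!/bin/bash
# Sketch: derive the Nth /64 from a /56 delegated prefix.
# Takes the first four hextets of the delegated prefix, written out in full
# (for example 2001:db8:0:ab00 for 2001:db8:0:ab00::/56), and a subnet number 0-255.
pd_subnet() {
    local first4="$1" subnet_id="$2"
    local head="${first4%:*}"       # first three hextets, untouched
    local fourth="${first4##*:}"    # fourth hextet: high byte is the ISP's, low byte is ours
    local base=$(( 16#$fourth & 0xff00 ))
    printf '%s:%x::/64\n' "$head" $(( base | (subnet_id & 0xff) ))
}

pd_subnet 2001:db8:0:ab00 1    # subnet 1, e.g. for eth1
pd_subnet 2001:db8:0:ab00 2    # subnet 2, e.g. for wlan0
```

The two calls print 2001:db8:0:ab01::/64 and 2001:db8:0:ab02::/64. In practice the DHCPv6 client does this arithmetic for you; the sketch only shows where the subnet number lands in the address.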

First things first

We need to set a few system parameters in order for our router to actually route IPv6 traffic.

- We have to tell the kernel to forward traffic.

In /etc/sysctl.conf, add the following two lines:

net.ipv6.conf.all.forwarding=1

net.ipv6.conf.default.forwarding=1

- We have to accept router advertisements from our upstream.

Normally, if you’ve turned on forwarding, then the kernel will ignore router advertisements. But they’ve added a special flag for routers like ours. Add the following line to /etc/sysctl.conf:

net.ipv6.conf.eth0.accept_ra = 2

- Apply the changes.

These changes will be applied at the next reboot. To read those parameters into the kernel immediately, run:

sysctl -p /etc/sysctl.conf
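To double-check that the running kernel agrees with the file, something like the following sketch could help. The function names are made up for illustration; the only external command it relies on is `sysctl -n`, which prints a single value.

```bash
#!/bin/bash
# Sketch: compare what /etc/sysctl.conf asks for with what the kernel is using.

# Print the value assigned to a key in a sysctl-style file (last assignment wins).
sysctl_conf_value() {
    local file="$1" key="$2"
    local pattern="${key//./\\.}"    # escape the dots so sed treats them literally
    sed -n "s/^[[:space:]]*${pattern}[[:space:]]*=[[:space:]]*//p" "$file" | tail -n 1
}

# Report whether the running value matches the configured one.
check_setting() {
    local key="$1" want running
    want="$(sysctl_conf_value /etc/sysctl.conf "$key")"
    running="$(sysctl -n "$key" 2>/dev/null)"
    if [[ -n $want && $want == "$running" ]]; then
        echo "ok       $key = $running"
    else
        echo "MISMATCH $key: file='$want' running='$running'"
    fi
}

# Example (not run here):
#   for key in net.ipv6.conf.all.forwarding net.ipv6.conf.default.forwarding \
#              net.ipv6.conf.eth0.accept_ra ; do
#       check_setting "$key"
#   done
```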

Stuff I tried that didn’t work

At first, I experimented with the /etc/network/interfaces file and the built-in ISC DHCP client. I could not figure out how to make that do anything. Documentation is sparse and mainly concerned with traditional IPv4 DHCP use cases.

Then I played with the Wide DHCPv6 Client or dhcp6c. It looked promising, because its configuration file had all of the right options. It allowed you to ask for an IA and a PD, and you could specify how to slice up the PD space into smaller subnets for other interfaces. However, when I ran it, I got an (IA) IP address on my external interface, but I never got a (PD) IP address on my internal interface, and I never saw any internal routes added. I spent many hours trying to get this to work. For the record, here is the config that I used:

# /etc/wide-dhcpv6/dhcp6c.conf

interface eth0 { # external facing interface (WAN)

send rapid-commit;

send ia-na 0; # request bender's eth0 network to talk to the router

send ia-pd 0; # request bender's eth1 network to share with the house

};

# non-temporary address allocation

id-assoc na 0 {

};

# prefix delegation

id-assoc pd 0 {

# internal/LAN interfaces will get addresses like this:

# (56-bit delegated prefix):(8-bit SLA ID):(64-bit host portion)

# SLA IDs start with 1, go up to 255 (because sla-len = 8)

prefix-interface eth1 { # internal facing interface (LAN)

sla-len 8; # bits of "our portion" of the PD subnet

sla-id 1; # eth1 gets sub-network number 1 out of 255 (8 bits)

ifid 1; # bender's eth1 IP address will end with this integer

};

};

What worked – dibbler and some duct tape

There is another DHCPv6 client called “dibbler” that I had heard good things about. So I installed it, and armed with my knowledge learned from dhcp6c, I was able to get a configuration that worked… sort of. It would require some assistance.

With the following configuration, dibbler-client will request an IA and a PD on eth0, and it will create a route on eth1 for its slice of the PD.

# /etc/dibbler/client.conf

log-level 7

downlink-prefix-ifaces "eth1"

inactive-mode

iface eth0 {

ia

pd

}

script "/etc/dibbler/script.sh"

But after dibbler-client runs, the network is still not really ready to use.

- The internal interface does not have an IP address on its slice of the PD.

- The system does not have a default route.

These things can be fixed by a helper script. Fortunately, dibbler allows us to specify a script that will run every time some change takes place. Here is the script that I wrote. It does not take any information from dibbler itself. It simply looks around the system and fills in the missing pieces.

#!/bin/bash

# /etc/dibbler/script.sh

router_iface="eth0"

internal_iface="eth1"

function log () {

printme="$*"

echo "$(date '+%F %T') : $printme" >> /var/log/dibbler/script.log

}

log "started with arguments >> $*"

# check for default route

if [[ $(ip -6 route | grep -c default) -gt 0 ]] ; then

# default route found

log "default route found >> $(ip -6 route | grep default)"

else

# no default route - look for route advertisements

log "default route not found"

router_ip=$(rdisc6 $router_iface | grep '^ *from' | grep -o '[0-9a-f:]\{4,\}')

if [[ -n $router_ip ]] ; then

route_command="ip -6 route add ::/0 via $router_ip dev $router_iface"

log "adding route >> $route_command"

$route_command

log "return code was $?"

fi

fi

# check for internal network IP

internal_ip="$(ip -6 addr show dev $internal_iface | grep 'scope global' | grep -o '[0-9a-f:]\{4,\}')"

if [[ -n $internal_ip ]] ; then

# internal IP is set

log "internal IP found >> $internal_ip"

else

# internal IP is not set

log "internal IP not found"

prefix="$(ip -6 route | grep $internal_iface | grep -v 'proto kernel' | grep -o '[0-9a-f:]\{4,\}::')"

if [[ -n $prefix ]] ; then

ip_command="ip -6 addr add ${prefix}1/64 dev $internal_iface"

log "adding IP >> $ip_command"

$ip_command

log "return code was $?"

# restart radvd

systemctl restart radvd

fi

fi

After the script runs, the router will be able to communicate with the internet using IPv6, and the other machines on the internal network will be able to communicate with the router.

NOTE – The version of dibbler (1.0.0~rc1-1) that comes with Ubuntu 15.10 crashed when I ran it. So I had to download a newer one. At first, I downloaded the source code for version 1.0.1 and compiled it. That seemed to work OK. But later, I grabbed the dibbler-client package (1.0.1) for Ubuntu 16.04 and installed it using “dpkg”. I prefer to install complete packages when I can.

The last step – advertise your new subnet to your network

When the machines on your network come up, they will look for router advertisements from your router. We need an RA daemon to send these out. The most common one is radvd.

While researching this setup, I saw several references to scripts that would modify the radvd config file, inserting the route prefixes that were assigned by the upstream prefix delegation. To me, this idea seemed like yet more duct tape. Fortunately, radvd does not need to be reconfigured when the prefixes change… it is smart enough to figure out what it needs to do. To make this happen, I used the magic prefix “::/64”, which tells radvd to read the prefix from the interface itself.

# /etc/radvd.conf

interface eth1 # LAN interface

{

AdvManagedFlag off; # no DHCPv6 server here.

AdvOtherConfigFlag off; # not even for options.

AdvSendAdvert on;

AdvDefaultPreference high;

AdvLinkMTU 1280;

prefix ::/64 # pick one non-link-local prefix from the interface

{

AdvOnLink on;

AdvAutonomous on;

};

};

Conclusion

That might seem like a lot for something that should “just work”. It turns out that the default ISC DHCP client does “just work” for a simple client machine.

But for a router, we need to be a little more explicit.

- Set up the kernel to forward and accept RAs.

- Set up dibbler to ask for IA and assign the external IP address.

- Set up dibbler to ask for PD and set up a route on internal interfaces.

- Use a helper script to assign IPs on the internal interfaces.

- Use a helper script to make sure the default route is set.

- Use radvd to advertise our new routes to clients in the home network.

I hope this record helps others get their native IPv6 configured.

Moogfest

This is either a story of poorly-managed expectations, or of me being an idiot, depending on how generous you’re feeling.

Eight months ago, when I heard that Moogfest was coming to Durham, I jumped on the chance to get tickets. I like electronic music, and I’ve always been fascinated by sound and signals and even signal processing mathematics. At the time, I was taking an online course in Digital Signal Processing for Music Applications. I recruited a wingman; my friend Jeremy is also into making noise using open source software.

The festival would take place over a four-day weekend in May, so I signed up for two vacation days and I cleared the calendar for four days of music and tech geekery. Since I am not much of a night-owl, I wanted to get my fill of the festival in the daytime and then return home at night… one benefit of being local to Durham.

Pretty soon, the emails started coming in… about one a week, usually about some band or another playing in Durham, with one or two being way off base, about some music-related parties on the west coast. So I started filing these emails in a folder called “moogfest”. Buried in the middle of that pile would be one email that was important… although I had purchased a ticket, I’d need to register for workshops that had limited attendance.

Unfortunately, I didn’t do any homework in advance of Moogfest. You know, life happens. After all, I’d have four days to deal with the festival. So Jeremy and I showed up at the American Tobacco campus on Thursday with a clean slate… dumb and dumber.

Thursday

Thursday started with drizzly rain to set the mood.

I’m not super familiar with Durham, but I know my way around the American Tobacco campus, so that’s where we started. We got our wristbands, visited the Modular Marketplace (a very small and crowded vendor area where they showed off modular synthesizer blocks) and the Moog Pop-up Factory (one part factory assembly area, and one part Guitar Center store). Thankfully, both of these areas made heavy use of headphones to keep the cacophony down.

From there, we ventured north, outside of my familiarity. The provided map was too small to really make any sense of, mainly because they tried to show the main festival area and the outlying concert area on the same map. So we spent a lot of time wandering, trying to figure out what we were supposed to see. We got lost and stopped for a milkshake and a map-reading. Finally, we found the 21c hotel and museum. There were three classrooms inside the building that housed workshops and talks, but that was not very clearly indicated anywhere. At every turn, it felt like we were in the “wrong place”.

We finally found a talk on “IBM Watson: Cognitive Tech for Developers”. This was one of the workshops that required pre-registration, but there seemed to be room left over from no-shows, so they let us in. This ended up being a marketing pitch for IBM’s research projects, with nothing to do with music synthesis or really even with IBM’s core business.

Being unfamiliar with Durham, and since several points on the map seemed to land in a large construction area, we wandered back to the American Tobacco campus for a talk. We arrived just after the talk started, so the doors were closed. So we looked for lunch. There were a few sit-down restaurants, but not much in terms of quick meals (on Friday, I discovered the food trucks).

Finally, we declared Thursday to be a bust, and we headed home.

We’d basically just spent $200 and a vacation day to attend three advertising sessions. I seriously considered just going back to work on Friday.

With hopes of salvaging Friday, I spent three hours that night poring over the schedule to figure out how it’s supposed to be done.

- I looked up all of the venues, noting that several were much farther north than we had wandered.

- I registered (wait-listed) for workshops that might be interesting.

- I tried to visualize the entire day on a single grid, gave up on that, and found I could filter the list.

- I read the descriptions of every event and put a ranking on my schedule.

- I learned – much to my disappointment – that the schedule was clearly divided at supper time, with talks and workshops in the daytime and music at night.

- I made a specific plan for Friday, which included sleeping in later and staying later in the night to hear some music.

Friday

I flew solo on Friday, starting off with some static displays and exploring the venues along West Morgan Street (the northern area). Then I attended a talk on “Techno-Shamanism”, a topic that looked interesting because it was so far out of my experience. The speaker was impressively expressive, but it was hard to tell whether he was sharing deep philosophical secrets or just babbling eloquently… I am still undecided.

I rushed off to the Carolina Theater for a live recording of the podcast “Song Exploder”. However, the theater filled just as I arrived (I mean literally, the people in front of me were seated) and the rest of the line was sent away. Severe bummer.

I spent a lot of time at a static display called the Wifi Whisperer, something that looked pretty dull from the description in the schedule, but that was actually pretty intriguing. It showed how our phones volunteer information about previous wifi spots we have attached to. My question – why would my phone share with the Moogfest network the name of the wifi from the beach house we stayed at last summer? Sure enough, it was there on the board!

Determined to not miss any more events, I rushed back to ATC for a talk on Polyrhythmic Loops, where the speaker demonstrated how modular synth clocks can be used to construct complex rhythms by sending sequences of triggers to sampler playback modules. I kind of wish we could’ve seen some of the wire-connecting madness involved, but instead he did a pretty good job of describing what he was doing and then he played the results. It was interesting, but unnecessarily loud.

The daytime talks were winding down, and my last one was about Kickstarter-funded music projects.

To fill the gap until the music started, I went to “Tech Jobs Under the Big Top”, a job fair that is held periodically in RTP. As if to underscore the craziness of “having a ticket but still needing another registration” that plagued Moogfest, the Big Top folks required two different types of registration that kept me occupied for much longer than the time I actually spent inside their tent. Note: the Big Top event was not part of Moogfest, but they were clearly capitalizing on the location, and they were even listed in the Moogfest schedule.

Up until this point, I had still not heard any MUSIC.

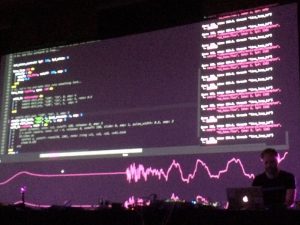

My wingman returned and we popped into our first music act: Sam Aaron playing a “Live Coding” set on his Sonic Pi. This performance finally brought Moogfest back into the black, justifying the ticket price and the hassles of the earlier schedule. His set was unbelievable, dropping beats from the command line like a Linux geek.

To wrap up the night, we hiked a half mile to the MotorCo stage to see Grimes, one of the headline attractions of Moogfest. Admittedly, I am not part of the target audience for this show, since I had never actually heard of Grimes, and I am about 20 years older than many of the attendees. But I had been briefly introduced to her sound at one of the static displays, so I was stoked for a good show. However, the performance itself was really more of a military theatrical production than a concert.

Sure, there was a performer somewhere on that tiny stage in the distance, but any potential talent there was hidden behind explosions of LEDs and lasers, backed by a few kilotons of speaker blasts.

When the bombs stopped for a moment, the small amount of interstitial audience engagement reminded me of a middle school pep rally, both in tone and in body language. The words were mostly indiscernible, but the message was clear. Strap in, because this rocket is about to blast off! We left after a few songs.

Saturday

Feeling that I had overstayed my leave from home, I planned a light docket for Saturday. There were only two talks that I wanted to see, both in the afternoon. I could be persuaded to see some more evening shows, but at that point, I could take them or leave them.

Some folks from Virginia Tech gave a workshop on the “Linux Laptop Orchestra” (titled “Designing Synthesizers with Pd-L2Ork”). From my brief pre-study, it looked like a mathematical tool used to design filters and create synthesizers. Instead, it turned out to be an automation tool similar to PLC ladder logic that could be used to trigger the playback of samples in specific patterns. This seemed like the laptop equivalent to the earlier talk on Polyrhythmic Loops done with synth modules. The talk was more focused on the wide array of toys (Raspberry Pi, Wii remotes) that could be connected to this ecosystem, and less about music. Overall, it looked like a very cool system, but not enough to justify a whole lot of tinkering to get it to run on my laptop (for some reason, my Ubuntu 15.10 and 16.04 systems both rejected the .deb packages because of package dependencies; perhaps this would be a good candidate for a Docker container).

The final session of Moogfest (for me, at least) was the workshop behind Sam Aaron’s Friday night performance. In “Synthesize Sounds with Live Code in Sonic Pi”, he explained in 90 minutes how to write Ruby code in Sonic Pi and how to sequence samples and synth sounds, occasionally diving deep into computer science topics like the benefits of pseudo-randomness and concurrency in programs. Sam is a smart fellow and a natural teacher, and he has developed a system that is both approachable by school kids and sophisticated enough for post-graduate adults.

Wrap Up

I skipped Sunday… I’d had enough.

My wife asked me if I would attend again next year, and I’m undecided (they DID announce 2017 dates today). I am thrilled that Moogfest has decided to give Durham a try. But for me personally, the experience was an impedance mismatch. I think a few adjustments, both on my part and on the part of the organizers, would make the festival a lot more attractive. Here is a list of suggestions that could help.

- Clearly, I should’ve done my homework. I should have read through each and every one of the 58 emails I received from them, possibly as I received them, rather than stockpiling them for later. I should have tuned in more closely a few weeks in advance of the date for some advance planning as the schedule materialized.

- Moogfest could have been less prolific with their emails, and clearly labeled the ones that required some action on my part.

- The organizers could schedule music events throughout the day instead of just during the night shift… I compare this festival with the IBMA Wide Open Bluegrass festival in Raleigh, which has music throughout the day and into the nights. Is there a particular reason why electronic music has to be played at night?

- I would enjoy a wider variety of smaller, more intimate performances, rather than megawatt-sized blockbuster performances. At least one performance at the Armory was loud enough to send me out of the venue, even though I had earplugs. It was awful.

- The festival could be held in a tighter geographic area. The American Tobacco Campus ended up being an outlier, with most of the action being between West Morgan Street and West Main Street (I felt like ATC was only included so Durham could showcase it for visitors). Having the events nearer to one another would mean less walking to-and-from events (I walked 14½ miles over the three days I attended). Shuttle buses could be provided for the severely outlying venues like MotorCo.

- The printed schedule could give a short description of the sessions, because the titles alone did not mean much. Static displays (red) should not be listed on the schedule as if they are timed events.

- The web site did a pretty good job of slicing and dicing the schedule, but I would like to be able to vote items up and down, then filter by my votes (don’t show me anything I have already thumbs-downed). I would also like to be able to turn on and off entire categories – for example, do not show me the (red) static events, but show all (orange) talks and (grey) workshops.

- The register-for-workshops process was clearly broken. As a late-registerer, my name was not on anyone’s printed list. But there was often room anyway, because there’s no reason for anyone to ever un-register for a workshop they later decided to skip.

- The time slots did not offer any time to get to and from venues. Maybe they should be staggered (northern-most events start on the hour, southern-most start on the half-hour) to give time for walking between them.

All in all, I had a good time. But I feel like I burned two vacation days (and some family karma/capital) to attend a couple of good workshops and several commercial displays. I think I would have been equally happy to attend only on Saturday and Sunday, if the music and talks were intermixed throughout the day, and did not require me to stick around until 2am.

The obstinate trash man

This weekend’s pet project was to set up Ubuntu Studio to run on my Macbook Pro.

Ubuntu Studio is a Linux distribution, based on Ubuntu, that comes with lots of audio and video software installed and configured. I have been wanting to play with Ardour, an open source digital audio workstation, and although it will run on a Mac, it runs much better on Linux. So I downloaded and burned a copy of the Ubuntu Studio “Live DVD”. This would allow me to test drive Ubuntu Studio on the Macbook without installing anything on the Macbook’s hard disk. It worked wonderfully, and so I decided to make a bootable “Live USB” stick as well. The Live USB stick acts just like the Live DVD, except it also allows you to save files back to the USB stick (obviously, you can’t save files to a read-only DVD). So I would be able to do my studio work in Linux and save my work when I reboot back into OSX.

I was greeted with a very weird bug in Ubuntu Studio’s desktop system. Whenever I tried to delete a file, I would get the following error:

Unable to find or create trash directory

What a weird error message!

Ubuntu Studio uses the XFCE desktop environment, which follows the Free Desktop’s “Desktop Trash Can Specification”. Yes, there are people who write specifications about how trash cans are supposed to work. There is a utility called “gvfs-trash” that actually handles moving deleted files to the appropriate trash can area. You can run this command from a shell prompt.

$ gvfs-trash somefile

Error trashing file: Unable to find or create trash directory

There’s that same error message.

I ran the same command with “strace” to figure out what it was doing, and I did a little bit of Googling. I found this blog post, which told me most of what I needed.

The gvfs-trash system wanted to find a directory called “.Trash-999” in the top level of the filesystem. It wanted 999 because my user ID number was 999 (run the “id” command to see what your user ID number is). Inside the /.Trash-999 folder, it also wanted two sub-folders named “files” and “info”. All three of these needed to have 700 permissions.

Here’s a one-liner that will do it all:

u=$(id -u) ; g=$(id -g) ; sudo mkdir -m700 /.Trash-$u ; sudo chown $u:$g /.Trash-$u ; mkdir -m700 /.Trash-$u/{files,info}

After that, the desktop system could remove files OK, and the gvfs-trash command could as well.
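The same layout can be wrapped in a small function that takes the top-level directory as an argument, which is handy for trying it on a scratch directory first. This is only a sketch: the name `make_trash_dir` is made up, and it assumes you can already write to the directory you pass in (for "/" itself you would still need the sudo and chown steps from the one-liner).

```bash
#!/bin/bash
# Sketch: create the per-user trash layout (.Trash-<uid>/files and /info, mode 700)
# under a given top-level directory.
make_trash_dir() {
    local topdir="${1%/}"            # drop a trailing slash so "/" becomes ""
    local trash="$topdir/.Trash-$(id -u)"
    # -m 700 applies to each directory named here when it is created
    mkdir -p -m 700 "$trash" "$trash/files" "$trash/info"
}

# Example (not run here): make_trash_dir /media/usbstick
```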

That gvfs-trash command might make a good alias!

alias rm='gvfs-trash'

Kingston: too scared to engage

A while back, I ordered a couple of microSD cards from Kingston. They came with these nifty little USB card readers.

Here is a picture.

The microSD card is in the front, and there are two of the USB card readers right behind it (one is upside-down). In the back, for comparison of scale, I show a Sandisk Cruzer Micro USB stick.

These tiny little USB card readers are very nice, attractive, and very well built. You can see that the USB plug only has the inner part with the four contacts. They left off the rectangular metal shell that most USB plugs have.

The reader can be fastened to your key ring using the little nylon string that is tied into a small hole in the end. They have gone so far as to make the hole a little bit recessed, so you can still plug in the microSD card while the string is attached.

Overall, it is a beautiful design.

However, I am not a fan of the little nylon strings. I was hoping that I could use a metal fastener to attach this reader to my key chain. A metal fastener would not jiggle around or get tangled like the string would. And it would also keep the microSD card from dropping out and getting lost.

So I wrote a short note to the folks at Kingston.

Hi guys,

I am currently in the process of moving from SD cards to micro SD cards, and I just bought a few from Kingston. These came with some nice micro SD card readers (see the photo attached).

I have an idea that might make these readers better for some of your customers (and for those who do not need the change, it would be no worse than what you have now).

Your current design is just long enough so that an inserted micro SD card is flush with the back edge. You can tell that someone took some great care to design the slot where the little string goes, because it still fits when a card is inserted, and it provides a little bit of pressure against the card, to keep it from falling out.

However, if the micro SD card reader were about 1.5 mm longer, the string hole would stick out past the end of an inserted micro SD card. That is, a card could be plugged in, and you could still see through the hole. This would make it possible to use other connectors besides the little strings. Personally, I like to use small split ring keyrings. Or like I have pictured here, you could use a crab claw clasp.

The little strings are a hassle on a keyring, especially since they are holding something so light in weight. The strings sometimes get tangled up in my keys. And the string does not really ensure that the card won’t slip out… it helps by adding some friction, but it does not BLOCK the card from coming out.

It’s something to consider. I hope you will. Your little reader looks to be the tiniest and most “robust” looking of the micro card readers out there. I think this little improvement would put it way over the top as the best reader to have.

Thanks, and all the best.

Alan Porter

I was very surprised when I received a reply from Kingston.

Dear Alan,

Thank you for your interest in Kingston Technology. Also, thank you for your input and suggestion for Kingston’s product line.

Kingston greatly values our customers’ opinions and insight. Unfortunately due to today’s litigious society, Kingston is forced to discard suggestions pertaining to new and future products. Therefore, we will be unable to move forward with your input and/or suggestion. We hope you understand our position.

If you have any other questions or require further assistance, feel free to contact us directly at 800 xxx-xxxx. We are available M-F, 6am-5pm, PT. I hope this information is helpful. Thank you for selecting Kingston as your upgrade partner.

Please include your email history with your reply

Best Regards,

xxxxxx xxxxxx

Customer Service/Sales Support

Kingston Technology Company

What a nice gesture… a personal thank-you note.

But what’s this part all about??

Unfortunately due to today’s litigious society, Kingston is forced to discard suggestions pertaining to new and future products.

I was shocked. They thanked me for writing, but they simply won’t allow themselves to listen to their biggest fans, because they’re scared that someone might sue them for listening.

What a horrible statement about our society! This is the exact polar opposite of the principles of sharing and feedback and continuous improvement that I am used to dealing with in the open source community.

Kingston lives in a feedback-free vacuum, fingers in their ears, and they blame us all for their uncooperative attitude. What does this say about us? Has the greatest nation in the world slowly grown old and senile, becoming scared of its own shadow? Will my children grow up to be scared to talk to strangers, scared to have a genuine dialog with another human, scared to actually accept criticism and suggestions, scared that someone would sue them, scared that they might tarnish their sacred brand? Is this the world that I want to leave to my children? Hell, no. So I made sure to write back to Kingston and register my disapproval of their spineless policy.

I hope some Chinese company will run with the elongation improvement. They don’t seem to be crippled by the imaginary legal threats from their own customers like Kingston seems to be.

I should note:

- I still like Kingston and their products. But this extreme risk aversion is the wrong way to go.

- My “improvement” has now been published (here on this blog), and so it is now officially “prior art” and cannot be patented. That means that companies, Kingston included, are free to use this idea without fear of being hit up on charges of patent infringement.

- The newer business tactic is to actively engage customers, creating a dialog with them, and letting them feel like they are contributing; not to blow them off and blame it on society.

Personally, I hope we will begin to see less of this corporate (and personal) scaredy cat culture in America.

Chrome and LVM snapshots

This is crazy.

I was trying to make an LVM snapshot of my home directory, and I kept getting this error:

$ lvcreate -s /dev/vg1/home --name=h2 --size=20G

/dev/mapper/vg1-h2: open failed: No such file or directory

/dev/vg1/h2: not found: device not cleared

Aborting. Failed to wipe snapshot exception store.

Unable to deactivate open vg1-h2 (254:12)

Unable to deactivate failed new LV. Manual intervention required.

I did some Googling around, and I saw a post from a guy at Red Hat that says this error had to do with “namespaces”. And by the way, Google Chrome uses namespaces, too. Followed by the strangest question…

I see:

“Uevent not generated! Calling udev_complete internally to avoid process lock-up.”

in the log. This looks like a problem we’ve discovered recently. See also:

http://www.redhat.com/archives/dm-devel/2011-August/msg00075.html

That happens when namespaces are in play. This was also found while using the Chrome web browser (which makes use of the namespaces for sandboxing).

Do you use Chrome web browser?

Peter

This makes no sense to me. I am trying to make a disk partition, and my browser is interfering with it?

Sure enough, I exited Chrome, and the snapshot worked.

    $ lvcreate -s /dev/vg1/home --name=h2 --size=20G
    Logical volume "h2" created

This is just so weird, I figured I should share it here. Maybe it’ll help if someone else runs across this bug.

Are you a human?

I got an email from Ebay saying my password had been compromised and so my account had been disabled. I spent a couple of hours trying to straighten it out, and in the process, I had an online chat with their help desk.

I kept wondering if the agent, “Sheryl”, was a real person or just some program on Ebay’s web servers. So I asked her “which comes first, Halloween or Thanksgiving?”

She got it right… on the second guess. Her answer, though, proved to me that she was not a bot. My guess was that she was in a call center in India. However, I suppose she could’ve been Canadian – their Thanksgiving is in October. But I am betting on India.

apt-xapian-index

When it comes to searching, there seem to be two battling camps: the ones that prefer to index stuff in the middle of the night, and the ones that just want to search when you need to search. The problem is that, many times, “in the middle of the night” does not end up being “when you’re not using the computer”. The other problem is that this sort of indexing operation can often completely cripple a machine, by using a lot of RAM and completely slamming disk I/O.

As far back as Windows 3.1, with its FindFast disk indexing tool, I have been annoyed by indexing processes that wake up and chew your hard disk to shreds… just in case you might want to search for something later.

What a stupid idea.

The latest culprit in Ubuntu is apt-xapian-index, which digs through your package list information, assembling some treasure trove of information that was apparently already on the disk, if you ever needed to ask for it.

Solution:

sudo apt-get remove apt-xapian-index

A better long-term solution:

If you have information that you would like to be indexed for faster retrieval later, do the indexing upon insertion, not periodically. That is, when you apt-get install a package, set a trigger to update the relevant bits of your package index at that time.
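Here is a minimal sketch of that idea in Python. The `PackageIndex` class and its `on_install` / `on_remove` hooks are made-up names for illustration only; this is not how apt or Xapian actually work. It just shows an index that is kept current by touching only the entries for the package being installed or removed, so there is never a need for a full nightly rebuild.

```python
from collections import defaultdict


class PackageIndex:
    """Toy inverted index: word -> set of package names (hypothetical)."""

    def __init__(self):
        self._words = defaultdict(set)
        self._descriptions = {}

    def on_install(self, name, description):
        # If the package is already indexed, drop its old entries first.
        if name in self._descriptions:
            self.on_remove(name)
        self._descriptions[name] = description
        # Only the words for this one package are touched.
        for word in self._tokens(name, description):
            self._words[word].add(name)

    def on_remove(self, name):
        description = self._descriptions.pop(name, None)
        if description is None:
            return  # package was never indexed
        for word in self._tokens(name, description):
            self._words[word].discard(name)
            if not self._words[word]:
                del self._words[word]

    def search(self, word):
        # .get() avoids creating empty entries in the defaultdict.
        return sorted(self._words.get(word.lower(), ()))

    @staticmethod
    def _tokens(name, description):
        return set((name + " " + description).lower().split())


index = PackageIndex()
index.on_install("vim", "Vi IMproved text editor")
index.on_install("nano", "small friendly text editor")
print(index.search("editor"))
index.on_remove("vim")
print(index.search("editor"))
```

The cost of each update is proportional to the size of one package description, not to the size of the whole package list, which is the whole point.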

Born in the USA

We can all thank Bruce Springsteen for planting the idea in our heads that a person has to be born in the United States in order to be eligible to become president.

Article II of the US Constitution clearly states the requirements, that a president must be “a natural born Citizen”. Nowhere does it state that the person must be born in the United States. It is true that most people who are born in the US are granted citizenship at birth. It is also true that people who are born outside of the US, from one or more US citizen parents, can also be granted US citizenship at birth.

When my daughter was born in Singapore, many of my friends made the comment that “she can never become president”. However, shortly after her birth, the US Consulate in Singapore presented us with a “Consular Report of Birth of a Citizen of the United States of America”, or Form FS-240. This form clearly declares that my daughter was born a US Citizen, and it is recognized by the US government as proof.

I bring this up because of the current scuttlebutt, claiming that Barack Obama was not born in the United States, and therefore can not legitimately act as president. I am not going to get into that argument.

But I do want to clarify that being born in the US is not a requirement for citizenship, and it is not a requirement for becoming president.

I can only hope that Audrey has this same problem in 30 years.

Do not pass? Go? Do not collect $200?

On my morning commute to work, I travel along a five-lane highway (two lanes in each direction, plus a shared turn lane). There is a bus stop in front of a large apartment complex, and the bus picks up a huge bunch of kids there.

Every morning, this south-bound bus stops in front of the apartment complex, and several dozen kids get on, taking their sweet time (as kids do). All of the south-bound cars are blocked while the bus is stopped.

At this time, I am usually wondering why that bus does not pull into the apartment complex’s driveway. After all, they are blocking a major roadway during a busy morning commute time.

But it gets worse… not only are all of the SOUTH-bound cars stopped, but the NORTH-bound cars are stopped as well!

North Carolina law does not require the drivers in the opposing lane to stop. But most drivers are not that familiar with the details of the traffic laws. And I suppose that when faced with this choice — either err on the side of stopping when not necessary, or err on the side of passing a stopped school bus — most people would take the more conservative option.

The North Carolina Driver’s Handbook can be downloaded from the DMV’s web site.

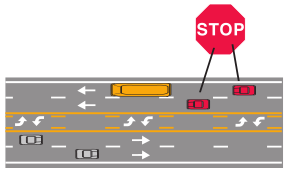

On page 43, you’ll see this picture:

And this is the explanation:

Roadway of four lanes or more with a center turning lane: When school bus stops for passengers, only traffic following the bus must stop.

Of course, it also goes on to say:

Children waiting for the bus or leaving the bus might dart out into traffic. Even when the school bus is not in sight, children at a bus stop sometimes will run into the street unexpectedly. Always be careful around school buses and school bus stops.

So, as always, common sense does apply.

I have considered what I might do if I happened to be the first north-bound car to approach as that school bus stopped in the south-bound lane. Would I keep going (with caution, of course)? Or would I stop, just because my fellow citizens might sneer at me for “breaking the law” and for driving with a reckless disregard for our children’s safety?

THINK of the CHILDREN!

How would a police officer react if he were driving right behind me?

I am not saying that stopping for the bus is a bad thing. But I am amused to see how people react when the rules are ambiguous, or when they are incompatible with what seems like a universal blanket rule (Thou shalt stop). Would you want to be the one who follows the letter of the law, but who appears to be a scofflaw?

Internet lie: “in stock”

Apparently, on the Internet, the term “in stock” means something completely different than it does in the real world.

What was I saying?

Now that I am married and have two kids, I find that I have to edit my stories down to under a minute and a half. Otherwise, the end of the story just never makes it out.

“Hi Honey, how was your day at work?”

“I have to tell you about this new tool we discovered today. We were installing our network software on a cluster of machines, which is usually pretty tedious and time consuming. And then one of the guys pulls out this live CD, and …”

“Daddy, is rice a vegetable or a fruit?”

“Hey kids, put that stuff down and wash your hands and face… now!”

“My friend Drew says that Megan won’t talk to Carter because his sister is mean!”

“I think the dog just threw up.”

What was I saying again? Oh yeah, 90 seconds. Sigh.